Author: cassidst

Policy Infographics

Helping to make sense of complex student loan policies.

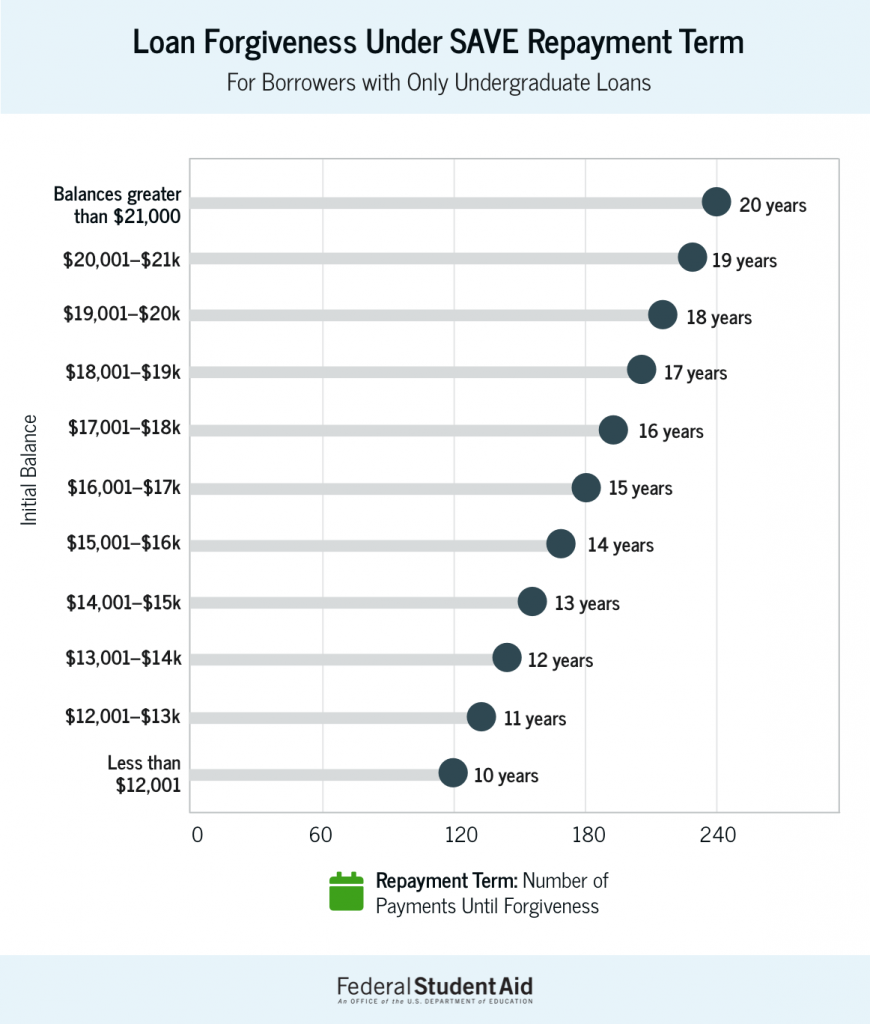

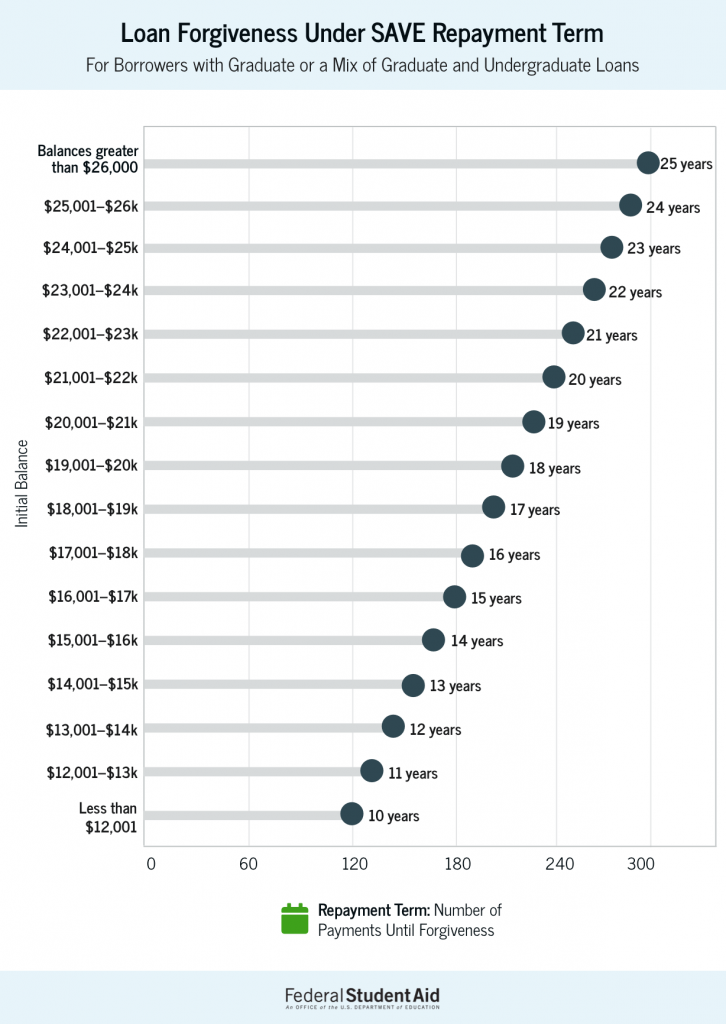

Saving on a Valuable Education (SAVE) Repayment Plan

Student loan borrowers enrolled in the SAVE plan may be eligible for early forgiveness of their loans. I designed this graphic as a quick way for borrowers to understand how the policy may affect their early forgiveness amount.

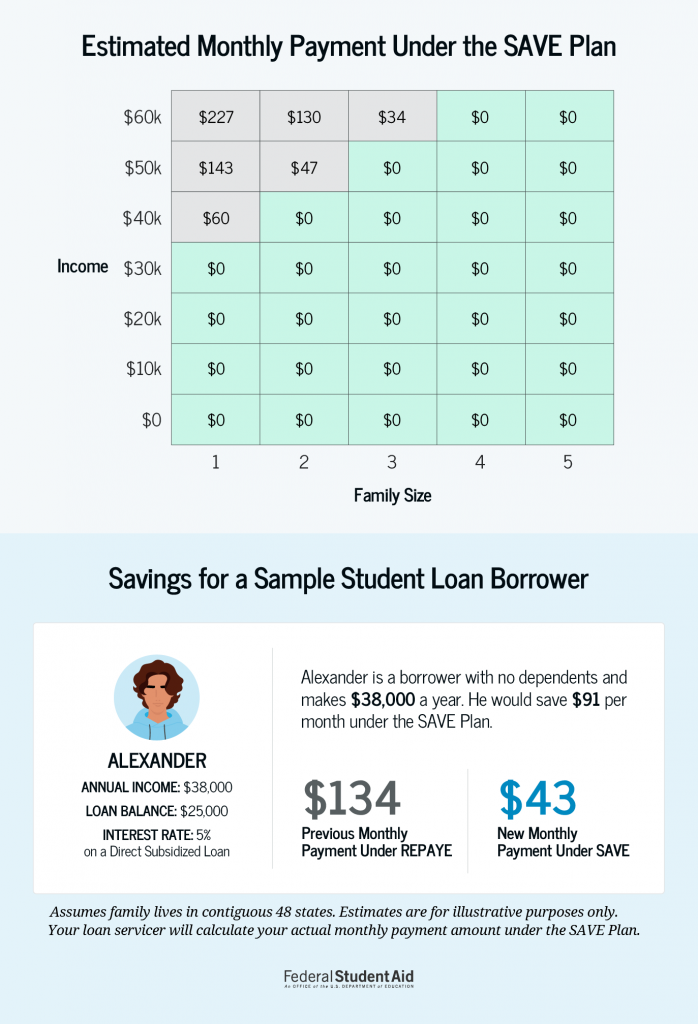

SAVE Repayment Amounts based on Income and Family Size

In this infographic, I developed a way for student loan borrowers to quickly determine where they fall in terms of monthly payment. Under the income-driven repayment plan, the amount you pay each month depends on your income and family size.

Public Service Loan Forgiveness (PSLF)

Under the 10-year Public Service Loan Forgiveness program, a student loan borrower can buy back certain months in their payment history to make them qualifying payments for PSLF. Specifically, borrowers can buy back months that don’t count as qualifying payments because they were in an ineligible deferment or forbearance status. To help illustrate that, I created this graphic for the information page on studentaid.gov.

Problem

Federal Student Aid, an office of the Department of Education, manages StudentAid.gov, the main website that supports Title IV higher education funding in the country. One of the most visited federal government sites, StudentAid.gov handles more than 20 million FAFSA (Free Application for Federal Student Aid) submissions per year and supports other related tasks, such as a personalized dashboard with a student’s aid summary, digital self-paced counseling for student loans, and applications for income-driven repayment plans.

Approach

This was the first top-task usability test to be conducted on the production site. Various individual products and features on StudentAid.gov have been usability tested independently, either during design sprints or after release. This was an opportunity to take a holistic view of the site and incorporate more content-based pages.

Our goals were to:

- Evaluate 10 common tasks and scenarios with users

- Uncover any navigation pain points and unexpected issues

- Test on both desktop and mobile devices

- Evaluate the site’s content in context with top tasks on content pages

We tested with a diverse group of students, parents, and student loan borrowers in repayment, with different incomes, ethnicities, and geographic locations across the U.S.

Results

Key Takeaways

- Most users don’t associate Federal Student Aid with the FAFSA. The term FAFSA has much stronger household recognition than Federal Student Aid, despite Federal Student Aid’s efforts to consolidate most of its program sites into one website.

- The site’s main mega-menu on desktops has room for improvement. Many users misunderstood categories such as “Manage Loans,” which they thought was more personalized to them.

- When users log in, a utility menu appears. Many users didn’t notice it because it is not prominent in the main navigation area, and on mobile it appears below other sections within the navigation.

- Long content pages meant users often hunted and pecked for information, resulting in longer-than-expected time-on-task metrics. Very few users used the site search, despite its prominence.

- The mobile website’s navigation proved difficult for users. Many felt the menu was overwhelming, and they couldn’t easily find the logged-in pages, such as Dashboard, My Aid, My Docs, and Settings.

Key Recommendations

- Continue mobile testing as part of a core user research approach

- Redesign the in-page navigation for content pages to better support anchor/jump links within the page and to provide mobile users a better experience

- Improve the mobile website’s navigation and consider how the logged-in links interact with the main site’s navigation. Consider a robust, secondary navigation on logged-in pages.

- Conduct a card sort on the site’s main topics to evaluate the site’s information architecture and navigation schemes. Consider condensing the number of links in the site’s main navigation.

Lessons learned

- Remote testing with mobile devices proved easier than anticipated. Using Microsoft Teams, we were able to easily have participants share their phone screens.

- Top-task testing, outside of feature-based testing, is a helpful activity and should be part of any evaluative user research approach. Developing a cadence for this type of testing ensures a regular interval for discovering continuous improvements.

This website project was part of a grant with a team of five faculty members at James Madison University, with a goal of educating the community on health policy. The initiative aims to educate the public about JMU’s health-related civic engagement in the community and the world.

The Problem

As the lead project designer, I worked with various stakeholders to conduct information-gathering sessions. As a relatively new initiative from James Madison University, the Health Policy Collaborative had little visibility and lacked a dedicated website.

Approach

I conducted research which included meeting with faculty, nurse practitioners, state policy experts, and local politicians. These meetings and interviews helped get a sense of what the newly formed group, the Health Policy Collaborative, would want to showcase on the website and how the general public would receive the information.

We learned users’ top tasks and goals, and gathered clear goals from our stakeholders.

Results

After identifying target audiences and developing user personas, I explored an information organization activity, attempting to piece together the various themes and information needs of the site.

Working inside the JMU content management system was limiting, but we were able to do certain things on the site, like pull in outside news sources. I led an effort to include an interview I conducted with a health policy expert on Medicaid expansion in Virginia.

Because this was a relatively short project, the site’s needs were going to change in the future. My goal for this group was to create a foundational website that presented their information clearly and professionally.

This meant creating an information architecture framework that could grow without hurting discoverability for users.

Because JMU’s site wasn’t responsive at the time, performance and usability on a phone or tablet remain an issue.

Lessons learned

- Logistics of scheduling interviews with stakeholders can prolong the time necessary to get information critical for a website.

- Content management systems (CMSs) often have limitations, and it’s important to understand the technology behind them so you don’t waste time designing something you know will not work.

For the purposes of this example, I use my work on Marriott.com’s booking process. This survey questionnaire was designed for research on Marriott’s digital products.

With any opportunity you have to engage with users, customers, or the general public, it’s important to remember that you are asking for someone’s time. Asking purposeful, relevant questions that lead you to a better understanding of your users is important. Leave behind the questions that don’t help you learn more. A method I use is to write out my questions, then justify to myself why I want to ask each one.

Survey questionnaires help to refine user research goals and questions. The qualitative and quantitative feedback collected will assist in the redesign of your product (in my case, the Marriott website and mobile app). For the purposes of this questionnaire, we are focusing on closed-response questions and looking to collect categorical, behavioral, and attitudinal information from users.

Questions

Introduction

Thank you for agreeing to participate in our survey! Your feedback helps us create more useful and intuitive products. In this survey, we ask a variety of questions about your hotel booking preferences, perceptions of rewards programs, and digital booking apps. Your participation is voluntary.

1. How do you most commonly book a hotel room? (check all that apply)

- Marriott.com

- Marriott smartphone app

- Another hotel company’s website or app

- Travel site (Expedia, Priceline, Hotels.com, etc.)

- Travel agent or travel company (online or by phone)

- Other [type other answer]

Why ask this question? This is an easy introductory question that helps us gather data about how users most commonly book hotels. Marriott would like to increase digital bookings by 10 percent, and it’s important to understand this user behavior as a benchmark metric.

2. When picking a hotel, which factors are most important to you? (check all that apply)

- Distance from location (city center, airport, etc.)

- Price

- Loyalty program (points earned or ability to use points)

- Amenities offered (fitness center, pool, restaurants)

- Hotel services (airport shuttle, bike rentals, etc.)

- Other [type other answer]

Why ask this question? Understanding user attitudes toward hotel choices is key to understanding what matters to users when making a selection. This may help refine what is advertised about a specific brand of hotel, or reinforce the need for price filtering and more upfront information about the particular hotel. It may also help to identify new organization schemes for filtering or advertising hotels.

3. If you are a Marriott Bonvoy rewards member, how satisfied are you with the rewards program overall?

- Very satisfied

- Somewhat satisfied

- Neutral/no opinion

- Somewhat dissatisfied

- Very dissatisfied

- N/A – I’m not a rewards member

Why ask this? This simple question helps to understand who is a member of the rewards program and who isn’t. It also helps to set a benchmark for satisfaction levels and gives us better insight into how well the rewards program is performing for users. We could ask this question again at a later point to see whether changes to the website or app not only increase the number of rewards members, but also increase satisfaction with the program overall.

4. Please rate the various benefits associated with a hotel rewards program. On a scale of 1 to 5, with 1 being the least important and 5 being the most important, rate the following:

| Benefit | 1 | 2 | 3 | 4 | 5 |
| --- | --- | --- | --- | --- | --- |
| Ability to use rewards to book free travel | | | | | |
| Complimentary services (free wi-fi, drinks, food, etc.) | | | | | |
| Dedicated customer service | | | | | |
| Exclusive rates | | | | | |
| Free room upgrades | | | | | |
| Mobile check-in or keyless entry to room | | | | | |
| Third-party offers and discounts on rental cars | | | | | |
| Credit card offering that gives you rewards points to use to book travel | | | | | |

Other: [type answer]

Why ask this? This question helps to understand user attitudes toward a hotel rewards program. One of Marriott’s business goals is to increase the number of new rewards members, and that starts with understanding what people value. The answers can reveal which reward benefits may be better to advertise to entice new users to join the program. Insights from this question may also help Marriott’s business and customer experience strategies refine the rewards program to better suit its customers.

5. If you use a smartphone, have you used a hotel rewards/hotel booking app in the past year?

- Yes, I use a hotel app on my phone regularly

- Yes, I have a hotel app, but don’t use it

- No, I don’t have a hotel app on my phone

- N/A – I don’t use a smartphone

Why ask this? Answers to this question help to understand user behaviors when it comes to mobile apps for hotels. Onboarding someone for an app experience requires that the app make its value proposition clear to users. An app that is downloaded but not used can indicate that users don’t perceive the app as helpful for accomplishing their goals and tasks. Part of the redesign effort involves understanding what a hotel app should offer that a mobile site doesn’t.

6. On average, how often do you stay in a hotel?

- More than once a week

- Once a week

- Once or twice a month

- Once or twice a year

- N/A – haven’t stayed in a hotel this year

Why ask this? This is a behavioral question that helps us to understand common customer habits when it comes to hotel stays. Staying more than once a week may indicate a business traveler, whereas staying once or twice a year may indicate a leisure traveler. One of the redesign goals is to increase digital bookings, and this question helps to separate repeat-stay users from potentially new or infrequent users. Certain things may matter more to frequent travelers than to infrequent ones, and with this question we can break down the data from other questions by that categorization.
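To make the categorization idea concrete, here is a minimal sketch of how answers to question 6 could be used to break down answers to question 1. The field names (`stay_frequency`, `booking_channels`), the segment labels, and the cutoff between "frequent" and "infrequent" are all assumptions made for illustration; a real analysis would choose its own thresholds.

```python
from collections import Counter, defaultdict

# Assumed cutoff: monthly or more often counts as a frequent traveler.
FREQUENT_ANSWERS = {
    "More than once a week",
    "Once a week",
    "Once or twice a month",
}


def segment(stay_frequency: str) -> str:
    """Map a question 6 answer to a hypothetical traveler segment."""
    if stay_frequency in FREQUENT_ANSWERS:
        return "frequent"
    if stay_frequency == "Once or twice a year":
        return "infrequent"
    return "no recent stay"


def channel_counts_by_segment(responses: list[dict]) -> dict[str, dict[str, int]]:
    """Count question 1 booking channels within each traveler segment.

    Each response is a dict with a 'stay_frequency' string and a
    'booking_channels' list (question 1 is check-all-that-apply).
    """
    table: dict[str, Counter] = defaultdict(Counter)
    for response in responses:
        table[segment(response["stay_frequency"])].update(response["booking_channels"])
    return {name: dict(counts) for name, counts in table.items()}


if __name__ == "__main__":
    # Made-up responses, only to show the shape of the input.
    sample = [
        {"stay_frequency": "Once a week", "booking_channels": ["Marriott.com"]},
        {"stay_frequency": "Once or twice a year", "booking_channels": ["Travel site"]},
    ]
    print(channel_counts_by_segment(sample))
```

The same grouping could be applied to any other closed-response question in the survey by swapping the field that is counted.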

7. Have you booked a hotel and airfare together in the past 2 years? If so, how have you booked this travel? (check all that apply)

- Marriott.com website

- Marriott mobile app

- Other hotel website

- Third-party travel site (Expedia, Priceline, etc.)

- Through a travel agent or travel company

- Airline website

- Other

- N/A – haven’t booked airfare and hotel together

Why ask this? This behavioral question helps to uncover user patterns relating to booking airfare and hotels together. First, it helps to identify the audience that has done this in the past two years, and it also filters for those who have booked on one of Marriott’s digital properties. It can help set a benchmark and identify users who have booked airfare and hotels with Marriott.

8. What types of online activities have you participated in during the last 30 days?

- Web searches

- Shopping

- Buy or reserve travel

- Social media

- Email or IM/chat

- News/weather/sports/blogs

- Online banking / financial services

- Lookup restaurants or menus

- Order food for pickup/delivery

- None of the above

- Other: __________________________________

Why ask this? This question helps to identify a user’s most common activities online. It can help to uncover technical skill when it comes to the web, and helps to categorize users when looking at the data from other questions.

9. Into what age range do you fall?

- 18-21

- 22-37

- 38-53

- 54-72

- 73-90

Why ask this? Categorization questions like this one help to understand who the audience taking the survey is. Ideally, our recruitment for this survey matches the typical user base for Marriott’s digital products, and we can better understand which age groups our audiences fall into. This can be helpful in the redesign process since we have a better understanding of who we are designing for.

10. What country do you live in?

- –select dropdown– (list of all countries)

Why ask this? Marriott is a global brand, and this categorization question helps to learn more about Marriott’s audience. People outside the U.S. may have different values, and it’s important to approach the redesign knowing that we are designing for a global audience. This question also helps if we want to categorize respondents’ answers based on the country they live in.

11. Do you have any additional thoughts to share about hotel bookings, rewards programs, or the Marriott digital app or website? (optional)

Why ask this? This optional, open-ended question is nice to include at the end of a survey in case there are thoughts users would like to share about things not covered on the survey. This space is there for users to describe additional thoughts or opinions about booking hotels, rewards programs, or specific comments about Marriott’s digital products.

Conclusion

Keep your questionnaire short and purposeful, and don’t be afraid to pre-test your survey with colleagues or friends you trust. Working out the kinks in the design of the questionnaire (e.g., do the questions make sense?) before launching your survey is critical.

Happy surveying!

Usability testing is one of the most useful tools to uncover how well a website is supporting users’ main goals and tasks. Testing can occur anytime during the development of a new system, but it also can be used to discover how well an existing system is performing; this is often called benchmark usability testing. For the purposes of this testing, we conducted a nano usability test with three users of Marriott.com using the live production website.

Our nano usability test is a great first start to evaluating how well Marriott’s online booking system is working for users. Testing helps to uncover usage patterns, challenges encountered by users, and additional research questions. The purpose of testing is not to walk away knowing how to fix every issue with the system. Rather, it’s a first step to understanding where improvements might be made within the online booking system.

To kick things off, we generated three major tasks and recruited three participants.

Task completion

Overall, participants performed well. Only participant 2 had trouble understanding the larger concept of Marriott.com offering different hotel brands within the same booking experience. Users commented that the process felt “easy, and relatively quick” given two users knew a destination and dates they desired. Two out of three commented that they most likely would first compare prices with another travel website like Kayak or Priceline before purchasing.

| Task | Participant 1 | Participant 2 | Participant 3 |
| --- | --- | --- | --- |
| Select destination, date and details | Completed | Completed | Completed with difficulty |
| Select hotel | Completed | Completed | Completed |
| Select room | Completed | Completed | Completed with difficulty |

Participant 1: Had very little trouble picking a destination and dates from the main homepage. On the results page, they noted they liked how different hotel chains within the Marriott brand were displayed, but they couldn’t find a way to sort by price, a filtering option they often like to use. On the room selection page for the Park Central Hotel in San Francisco, they said they liked the different price options, and the prepay and save would be a feature they’d use. Often, they would pick the cheapest room option.

Participant 2: This user was using a smaller laptop and didn’t initially see the homepage call-to-action booking feature. They selected “Find and Reserve,” which dropped down the same selections to pick a destination and date. Once they searched for Austin, Texas, the user was briefly confused as to why multiple hotel brands were displayed on the results page. “I guess Marriott must own them,” the user said. The user also asked why the results list showed hotels not available on the dates selected. They noticed a checkbox that filters to available hotels, but were confused why that wasn’t the default option. They selected a downtown Austin hotel and a prepay room, although they commented on how long the title of the room was.

Participant 3: This user didn’t have a destination in mind, so they selected the Deals and Packages page, and then the Hawaii vacations link. The system sent the user to the Vacations by Marriott site, a separate site with a different booking experience. On this page, featured hotels were listed but with no price. The user selected the first option, then was prompted to input a date and departure city. Once the list of results was returned, the user selected the first option for the king room. While they successfully booked a room, albeit through the vacations website, they did ask, “Am I on the right site? Did I do this right?”

Main findings: user challenges

- Finding an option to sort by price was difficult

  - Participant 1 had difficulty finding an option to sort the list of hotels by price, which doesn’t appear to be an option within the results page. Sorting by price is a common organization scheme that most travel sites offer. On the results page, the default scheme is to sort by distance.

- Deciding on a room type took special care, since the rooms’ titles are often long

  - While choice is often good for users, too much can overwhelm them. In this case, selecting a room at a particular hotel was somewhat difficult because hotel rooms have long titles set in a heavy font that is difficult to read. This can increase users’ cognitive load and easily lead to frustration. One room for a Courtyard Marriott was titled, “Cool Weekend Getaway, 10 percent off 2 night stay, prepay in full, non-refundable if cancelled less than 1 day before arrival, no changes, based upon availability” in a large, bold font.

- The Marriott vacations experience is completely separate from the main Marriott.com booking experience

  - One user who didn’t have a destination in mind selected the deals section of the website. After browsing for Hawaii, they were taken to the Marriott Vacation website, a separate experience that had a different look and feel. This separate site may confuse users, and there wasn’t any indication that they were leaving the main Marriott site.

- The hotel results list often included hotels not available or coming soon

  - Even though a user selected dates for a trip, the results page often displayed hotels that were not available or “opening soon.” The option to display only available hotels was not selected by default. Showing all hotels in the list may be helpful for someone who is browsing, but could be frustrating to someone who knows the dates they want to travel. More results in the list means more items users have to scan through, which can increase cognitive load.

Additional user research questions

Our test stopped at the payment section and was narrowly focused only on the booking process. Since we were running a quick nano usability test, we didn’t have time to prepare fake information to use in the payment step. However, this is a critical step that deserves to be tested in the future. In addition, further research questions may surround:

- What additional challenges are encountered by users attempting common tasks on Marriott.com? Tasks could include:

  - Changing or canceling a reservation

  - Signing up for loyalty program/credit card

  - Contacting customer service

- What substantial usability impediments exist? (items that may influence a user to abandon the website or booking process)

- What are additional customer insights that may help Marriott’s digital strategy?

One of the most important next steps is to ensure Marriott’s website has clearly identified and measurable goals. Understanding Marriott’s goals and business strategies will help determine research activities to further improve Marriott’s digital products.

Conclusion

Conducting user research helps designers conceptualize systems and processes that match how users think. Designing an experience that just plain works well doesn’t happen by luck. Through proven research methods and user engagement, organizations can build products with users, rather than “for” users. Involving users in prototyping, development, and testing is a necessary step that gives a product a higher chance of success.

As demonstrated with the nano usability test, research doesn’t have to be a long, drawn-out process. With only three users, we were able to quickly identify areas of the booking process to explore. This quick testing method gave us insights that we didn’t have before. Testing often allows a continuous check-in on how a design is performing and gives organizations insights into how to improve their products. Research is a great way to ensure designs match user expectations. Any form of research, big or small, helps improve the user experience and align digital products with the goals of an organization.

Usability testing is one of the most useful tools to uncover how a website works for users. With effective planning, recruitment, and execution, any UX team or individual will be able to uncover a site’s main pain points and formulate recommendations that will help to improve the overall user experience in very little time.

A method of usability testing that can be useful at the beginning of a project is discount usability testing. These tests are planned with a small number of users, since we are not looking for statistical significance in terms of usability issues. Instead, we plan a test and recruit users to gather qualitative information and observe how users interact with our website while conducting a number of tasks. To accomplish this, we generally don’t need to test with more than seven users.

Here’s how I would approach it.

Number of participants

For our tests, we only need to recruit eight testers, one being an alternate in the event of a cancellation. We are aiming for seven users for a number of reasons. According to Jakob Nielsen, an expert on usability and user-centered design, once you test with more than five users, you start to see the same issues and learn few additional insights. Our limited time frame only gives us a chance to run one round of usability testing. With that, we need to maximize our time with our testers, and recruiting eight testers (one alternate) is our best course of action.

Testing with only a few participants

Testing with fewer than 10 users may appear insufficient to draw major conclusions about a website’s usability issues. However, industry research tells us that we can uncover nearly 75% of the major usability issues in five sessions or fewer (Nielsen 1993). Again, we aren’t looking for statistical significance, since our method of usability testing is not conducted in a true academic sense. Instead, we are attempting to identify what about a product is difficult to use. We will use direct observation and encourage our testers to “think aloud” as they perform the tasks set for them. This will help us uncover the major usability issues that are contributing to a website’s main problems.

In addition, the severity of the issues uncovered during usability tests is related to how early they appear in the rounds of testing. In other words, the severity and frequency of usability issues are significantly correlated, which implies that severe issues are commonly discovered during the first few tests (Virzi 1992). This means we can get more value out of testing with only seven users, since it will save us a considerable amount of time while still giving us strong usability insights.
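The diminishing returns described above are often modeled with the problem-discovery formula published by Nielsen and Landauer: the expected share of problems found after n sessions is 1 - (1 - L)^n, where L is the chance that a single user exposes a given problem. The sketch below assumes L = 0.31, the average they reported across the projects they studied; real products can have noticeably higher or lower rates, so the curve should be read as a rough planning aid rather than a prediction for any specific site. The function names are my own.

```python
def share_of_problems_found(n_users: int, discovery_rate: float = 0.31) -> float:
    """Expected share of usability problems seen at least once after n_users sessions.

    Implements 1 - (1 - L)^n. The default L = 0.31 is an assumed average,
    not a measured value for any particular website.
    """
    if n_users < 0:
        raise ValueError("n_users must be zero or greater")
    if not 0.0 <= discovery_rate <= 1.0:
        raise ValueError("discovery_rate must be between 0 and 1")
    return 1.0 - (1.0 - discovery_rate) ** n_users


def discovery_curve(max_users: int, discovery_rate: float = 0.31) -> list[tuple[int, float]]:
    """Return (number of users, expected share found) for 1..max_users."""
    return [
        (n, share_of_problems_found(n, discovery_rate))
        for n in range(1, max_users + 1)
    ]


if __name__ == "__main__":
    # Print the curve up to the eight recruits in this plan.
    for n, share in discovery_curve(8):
        print(f"{n} users: {share:.0%} of problems expected to surface")
```

Under that assumed rate, five users surface roughly 84% of problems and seven users a little over 92%, which is why each additional session past the first handful adds less new information.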

The testing plan

Our testing plan is designed to accommodate the development team’s agile software development process. We will be efficient with our time, but take real consideration when we are drawing conclusions, findings, and recommendations in order to be smart about any changes we propose to the website. While the development team is working in week-long sprints, the UX group will need three weeks to complete our work.

| Week | Activities |
| --- | --- |
| Week 1 – Plan & Recruit users | Begin recruiting eight users for testing; develop the facilitator script, key tasks, and questions for participants, and collaborate with the development and business teams to make sure our tasks reflect our site’s main tasks; begin to schedule usability sessions with participants; brainstorm a possible honorarium we can provide testers (gift card, cash, etc.) |
| Week 2 – Test | Conduct usability test sessions in person at our usability lab (UX group facilitator will lead sessions); invite the development team or interested parties to observe remotely; after each session, debrief with anyone who observed and discuss top user pain points, user emotional reactions (nonverbal body language), and any other issues; limit sessions to two per day |
| Week 3 – Analysis & Recommendations | Analyze usability findings and synthesize major themes; develop recommendations that address major findings; depending on the severity of issues uncovered, work with the development team to fold changes into weekly sprints thereafter |

In addition to the first round of usability testing, I would recommend building this type of iterative testing into your software development process. Since we can observe significant usability issues with only a few tests, conducting more regular analysis will help to proactively identify usability issues that otherwise could hurt our sales and frustrate our users.

This usability testing plan allows us to work efficiently and will not cost much money. While we often have a limited timeframe, our tests are planned to maximize our testing resources. Assuming no other issues come up, the UX group should be able to plan, test, debrief, and recommend development changes to the site just in time to not upset your scrum master.

Brightline App Mobile Usability Testing

Brightline is a new high-speed train service in Florida. We were tasked with testing the booking app and related top tasks. Using moderated in-person usability testing, we recruited nine participants and produced a findings report based on our analysis.

The Problem

We wanted to conduct usability testing to identify potential improvements for the booking app.

Our research goals were to:

- Observe user patterns and behaviors as they attempt a set of common tasks using the mobile application

- Identify how easily users accomplish common tasks

- Understand users’ experiences and expectations as they work with the app

- Gather user attitudes and perceptions as they relate to this type of booking app

Approach

Our formative usability study followed the “think aloud” protocol by asking test participants to describe their thoughts as they attempted to complete three separate tasks. We recruited nine participants for this study. We used a moderated in-person testing method.

Results

- Each video was observed, which helped surface patterns and themes based on how users navigate the app and attempt the tasks.

- From the initial observations and findings, themes were sorted and prioritized based on frequency of the issue and other qualitative feedback. Post-test questions also helped to inform the findings.

- We then developed recommendations that address several of the findings.

Main takeaways:

- The booking process is clear and the app gives users a straightforward entry point to begin booking a trip.

- The app’s overall performance and technical issues can easily frustrate users.

- Aspects of the seat selection task in the booking process have room for improvement.

Lessons learned

- During usability testing on mobile apps, it can be difficult to see how a user is interacting with the app. Having a way to record the phone screen or video record the phone is important so you can review those interactions later.

- If possible, make users comfortable and allow them to use their own phone. If they are not comfortable downloading an app, have a backup phone ready for them to use. People are often the most familiar with their own devices.

- Reminding users to “think aloud” is important when conducting in-person moderated usability testing.

Weather Underground Usability Testing

I conducted remote unmoderated usability testing for the Weather Underground website. We tested top tasks on the site and gained user insights into how well the site works for them.

The Problem

We tested Weather Underground’s website based on common tasks with the intention to see how well the site performs.

Our research goals were to:

- Observe user patterns and behaviors as they attempt a set of common tasks

- Identify how easily users accomplish common tasks

- Understand users’ experiences and expectations as they work with the website

Approach

Our formative usability study followed the “think aloud” protocol by asking test participants to describe their thoughts as they attempted to complete three separate tasks. We recruited five participants for this study. We used an unmoderated remote testing service called Validately, which recorded participants’ screens and audio. Four out of five testers check the weather regularly.

While five participants may appear small, most common usability issues can be identified with a small sample since we are more focused on qualitative feedback and general experiences.

How we analyzed the data:

- Each video was observed, which helped surface patterns and themes based on how users navigate the website and attempt the tasks.

- From the initial observations and findings, themes were sorted and prioritized based on frequency of the issue and other qualitative feedback. A set of questions was asked after the test, which also influenced the findings.

- Using the findings, we developed recommendations or identified further areas of research.
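As a rough illustration of the frequency-based prioritization step, the sketch below counts how many distinct participants ran into each theme and ranks themes from most to least common. The function name and the (participant, theme) input format are assumptions for this example, and the sample observations are invented to show the shape of the data; they are not results from this study.

```python
from collections import Counter


def rank_themes(observations: list[tuple[str, str]]) -> list[tuple[str, int]]:
    """Rank themes by the number of distinct participants who hit them.

    observations holds (participant_id, theme) pairs noted while watching
    the session videos. A theme seen several times from the same
    participant is counted once.
    """
    unique_pairs = set(observations)
    counts = Counter(theme for _, theme in unique_pairs)
    # Most frequent first; ties are broken alphabetically for stable output.
    return sorted(counts.items(), key=lambda item: (-item[1], item[0]))


if __name__ == "__main__":
    # Invented sample notes, for illustration only.
    notes = [
        ("P1", "navigation label unclear"),
        ("P2", "navigation label unclear"),
        ("P2", "ad mistaken for content"),
        ("P2", "ad mistaken for content"),
        ("P4", "navigation label unclear"),
    ]
    for theme, participants in rank_themes(notes):
        print(f"{participants} participant(s): {theme}")
```

Frequency is only one input. As noted above, qualitative feedback and post-test answers also shaped the final priorities, so a ranking like this is a starting point for discussion rather than the final order.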

Results

Main takeaways include:

- Users easily found local weather forecasts.

- Navigation groupings and labels confused users for two of the tasks.

- The website features large ads that can affect how users find information and use navigation.

- Static content pages and their organization could be improved.

Lessons Learned

While unmoderated remote usability testing offers an efficient way to test, it’s not as personal as in-person testing, and you may have to test more remote users due to technical issues encountered during testing.